A Malachite simulator to facilitate experimentation

Summary: I coded a small tool called malachite-simulator. It is meant as a playground to facilitate experimentation in Rust with new abstractions on top of the Malachite core libraries. I used the simulator to build two proofs of concept, including a toy integration with reth.

I've always been fascinated by the complexity of distributed algorithms. To try to understand a consensus algorithm is an exercise in humility and patience. Tools that facilitate understanding of such systems are therefore some of the most fun & meaningful coding projects for me.

Overview

The simulator I wrote for Malachite is extremely simple. It instantiates the Malachite core libraries into an abstraction called an Application. The application does not do much: it mainly sends values to be proposed to Malachite and interprets the decisions. As in the replicated state machine design pattern, the application is replicated across multiple instances. In the case of the simulator, replication does not help with fault tolerance or scalability. Instead, we replicate the application to get a feel for what programming on top of Malachite would be like.

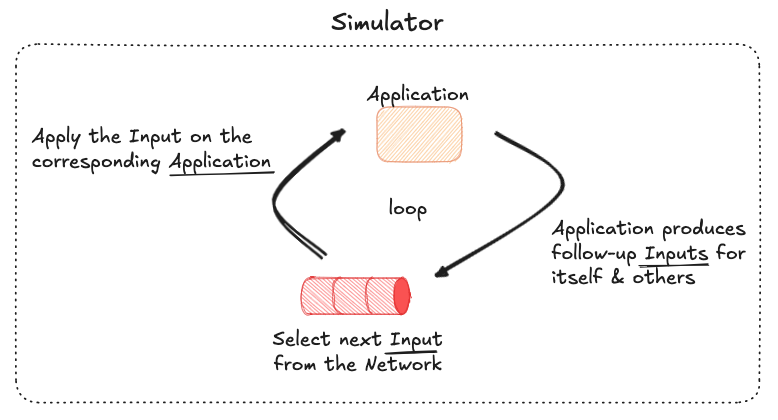

Unlike a conventional replicated state machine, however, all instances of the application are running on the same machine and in the same process. More specifically, another abstraction called a Simulator is the one that orchestrates among the different instances of applications. The simulator starts applications; it runs discrete steps of each of application to enable it to progress; and handles all of the input and output across all applications – including message passing among them.

Intuitively, the simulator acts as a scheduler does in an operating system. In addition, the simulator also routes messages among applications. The output of one application may become the input of another. The networking layer is abstracted in a rudimentary std::sync::mpsc FIFO channel. The following sketch gives a rough idea.
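In code, the scheduling-and-routing loop described above might look roughly like the following. This is a minimal sketch and not the actual malachite-simulator code: the `Message` type, the fields of `Application` and `Simulator`, the signature of `step`, and the toy "ack" protocol are all assumptions made for illustration. The real simulator exchanges Malachite consensus messages instead.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// Hypothetical message type (assumption, not the simulator's real type).
#[derive(Debug)]
struct Message {
    from: usize,
    to: usize,
    payload: String,
}

/// Hypothetical stand-in for one application instance.
struct Application {
    id: usize,
    inbox: Receiver<Message>,
    outbox: Sender<Message>,
    seen: Vec<String>,
}

impl Application {
    /// One discrete step: handle at most one pending input.
    fn step(&mut self) {
        if let Ok(msg) = self.inbox.try_recv() {
            // Toy protocol: acknowledge anything that is not itself an ack.
            if msg.payload != "ack" {
                let reply = Message { from: self.id, to: msg.from, payload: "ack".to_string() };
                self.outbox.send(reply).expect("simulator output channel closed");
            }
            self.seen.push(msg.payload);
        }
    }
}

/// Owns every application plus the channels that stand in for the network.
struct Simulator {
    apps: Vec<Application>,
    inboxes: Vec<Sender<Message>>,
    outputs: Receiver<Message>,
}

impl Simulator {
    fn new(peer_count: usize) -> Self {
        let (out_tx, outputs) = channel();
        let mut apps = Vec::new();
        let mut inboxes = Vec::new();
        for id in 0..peer_count {
            let (in_tx, inbox) = channel();
            inboxes.push(in_tx);
            apps.push(Application { id, inbox, outbox: out_tx.clone(), seen: Vec::new() });
        }
        Simulator { apps, inboxes, outputs }
    }

    /// Schedule every application once, then route whatever they emitted.
    fn step(&mut self) {
        for app in self.apps.iter_mut() {
            app.step();
        }
        while let Ok(msg) = self.outputs.try_recv() {
            let to = msg.to;
            self.inboxes[to].send(msg).expect("application inbox closed");
        }
    }
}

/// Inject one message addressed to peer 1 and run a few simulator steps.
fn demo_routing() -> (Vec<String>, Vec<String>) {
    let mut sim = Simulator::new(2);
    sim.inboxes[1]
        .send(Message { from: 0, to: 1, payload: "proposal".to_string() })
        .expect("application inbox closed");
    for _ in 0..3 {
        sim.step();
    }
    (sim.apps[0].seen.clone(), sim.apps[1].seen.clone())
}

fn main() {
    let (peer0, peer1) = demo_routing();
    println!("peer 0 saw {:?}, peer 1 saw {:?}", peer0, peer1);
}
```

Because everything runs in one thread and each `step` call is explicit, a run like this is deterministic, which is a large part of what makes this style of simulation convenient for quick experiments.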

What can the simulator do?

I wrote the simulator to enable quick prototyping. Its main benefit for developers is the ability to learn fast while writing abstractions, integrations, and proofs of concept on top of Malachite.

No Docker nonsense needed. No IP addresses, ports, configuration files, private keys, genesis files, or storage backend configuration needed. I can just focus on the integration with Malachite, and on the abstractions above it, to prove or disprove some crazy novel use-case. The simulator allows me to throw away a ridiculous amount of boilerplate that is unnecessary when proving new concepts or writing quick hacks.

Below are two examples of what I did with the simulator, and one prototype I plan to write.

i. Log abstraction

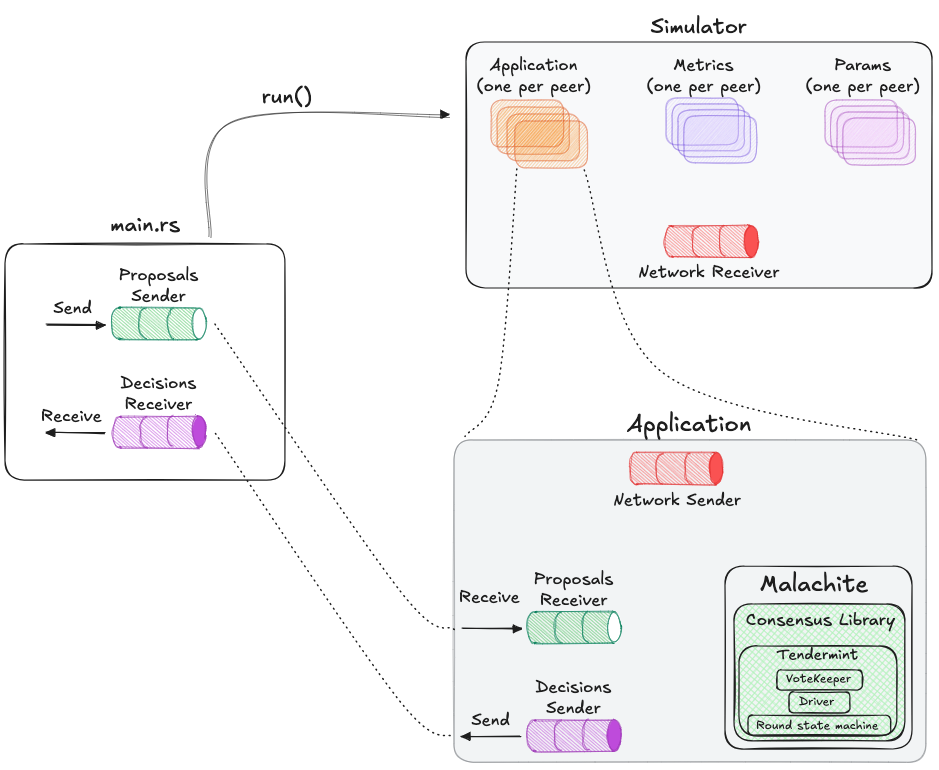

The basic job of the simulator is to run Malachite as if it was a replicated cluster producing a log of decisions using BFT consensus. This is the first thing I coded with the simulator. The log is implemented as a channel using std::sync::mpsc, and every decision that any peer in the system takes will arrive as a new entry on this channel. The complete overview of the system looks as follows. Note the main.rs component on the left, which consumes the log of decisions, called "Decisions Receiver".
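To make the "log of decisions" idea concrete, here is a small sketch of how a consumer could turn the raw stream of per-peer decisions into an agreed log. It is an illustration under assumptions, not the simulator's code: the `Decision` struct mirrors the `peer` and `value_id` fields that appear in the test further down, but the `height` field and the `agreed_log` helper are hypothetical additions.

```rust
use std::collections::BTreeMap;
use std::sync::mpsc::{channel, Receiver};

/// Hypothetical decision entry; `height` is an assumption added for illustration.
#[derive(Debug)]
struct Decision {
    peer: usize,
    height: u64,
    value_id: u64,
}

/// Drain the channel and return, in height order, the values that
/// every one of the `peer_count` peers decided identically.
fn agreed_log(decisions: &Receiver<Decision>, peer_count: usize) -> Vec<u64> {
    // height -> (peer -> decided value)
    let mut by_height: BTreeMap<u64, BTreeMap<usize, u64>> = BTreeMap::new();
    while let Ok(d) = decisions.try_recv() {
        by_height.entry(d.height).or_default().insert(d.peer, d.value_id);
    }

    let mut log = Vec::new();
    for votes in by_height.values() {
        // Skip heights where some peer has not decided yet.
        if votes.len() != peer_count {
            continue;
        }
        let mut values = votes.values();
        if let Some(first) = values.next() {
            if values.all(|v| v == first) {
                log.push(*first);
            }
        }
    }
    log
}

/// Three peers decide value 42 at height 1; only two have decided at height 2.
fn demo_log() -> Vec<u64> {
    let (tx, rx) = channel();
    for peer in 0..3 {
        tx.send(Decision { peer, height: 1, value_id: 42 }).expect("log receiver dropped");
    }
    for peer in 0..2 {
        tx.send(Decision { peer, height: 2, value_id: 7 }).expect("log receiver dropped");
    }
    agreed_log(&rx, 3)
}

fn main() {
    println!("agreed log: {:?}", demo_log());
}
```

In this run only height 1 makes it into the log, because height 2 is still missing one peer's decision.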

This log-based interface is also the only test that exists in that codebase at the moment. The test is here. The main part of the snippet handles creating proposals, running the simulator, and consuming the decisions that peers took.

    // Create a value to be proposed
    proposals.send(BaseValue(proposal))

    loop {
        // The simulator takes another step
        n.step(&mut states);

        // Check if the system reached a decision
        match decisions.try_recv() {
            Ok(v) => {
                println!("found decision {} from peer {}", v.value_id, v.peer);
                let current_decision = v.value_id.0;
                assert_eq!(current_decision, proposal);
                peer_count += 1;
                if peer_count == PEER_SET_SIZE {
                    // If all peers reached a decision, quit the inner loop
                    break;
                }
            }
            // ..
        }
    }

ii. Proof of concept integration with reth

The simulator significantly lowered the barrier to proving out how Malachite could be used as a consensus engine to drive reth, the Rust-based Ethereum execution client. The code is open-source in the repo rem-poc. To emphasize again: this is just a PoC, a mere toy!

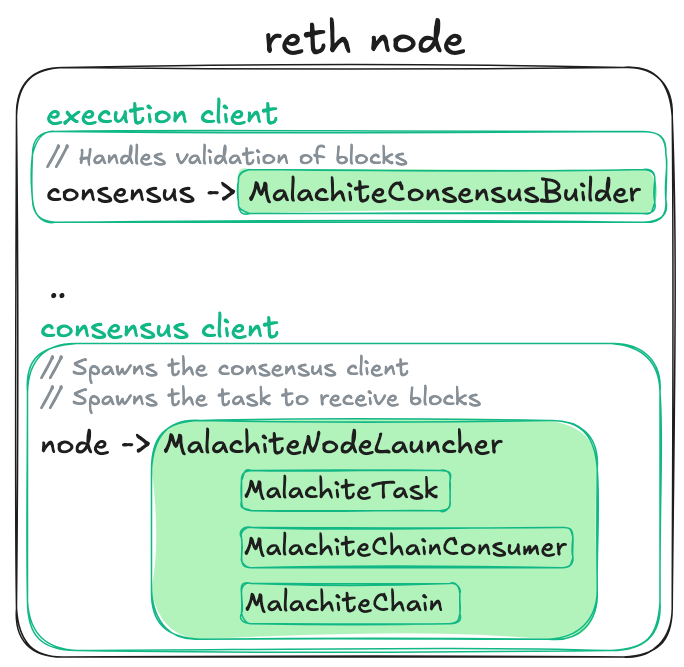

I will write about this integration in more detail in a dedicated post soon. Here I'll just cover the high level architecture at a glance. I modified the code for the reth node in two main places:

- Replaced the type that handles validation in the execution client, so that instead of using the default (

EthBeaconConsensus) mode, we use a dummy one to bypass any validation. I believe this part is actually not key, and the prototype would work with the default consensus validation type, but have not verified this yet. - Replaced the default node launcher to make it spawn two additional tasks. One task is for running the Malachite simulator in the background. I call this task MalachiteChain, and this code is 99% percent based on log-based simulator I described above. The other task is for consuming the blocks that the chain produces and marshal them into something that

rethcan execute. I called this task MalachiteChainConsumer, for lack of a better term.

reth node to accommodate block building (i.e., a consensus client) using the Malachite simulator.This snipped from here shows how the scaffolding looks at the highest level. Most of the complexity is hidden inside MalachiteNodeLauncher and underlying types.

    let NodeHandle {
        node,
        node_exit_future: _,
    } = NodeBuilder::new(node_config)
        .testing_node(tasks.executor())
        .with_types::<EthereumNode>()
        .with_components(EthereumNode::components().consensus(MalachiteConsensusBuilder::default()))
        .with_add_ons::<EthereumAddOns>()
        .launch_with_fn(|builder| {
            let launcher = MalachiteNodeLauncher::new(tasks.executor(), builder.config().datadir());
            builder.launch_with(launcher)
        })
        .await?;

The most peculiar and unrealistic part of this proof of concept is that the entire blockchain-producing logic (the piece called MalachiteChain) runs in the same process as the execution client. In the normal pattern, we pair one execution client process with one consensus client process. But in this example the whole consensus system runs within the execution client. A prototype closer to real conditions would not rely on this assumption. It is this assumption, however, that allowed me to build the concept in a mere few days.
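The shape of the two in-process background tasks can be sketched as a plain producer and consumer connected by a channel. This is only an illustration of the pattern, not the rem-poc code: it uses `std::thread` instead of reth's async task executor, and `DecidedBlock`, `ExecutableBlock`, `chain_task`, and `consumer_task` are hypothetical names standing in for MalachiteChain, MalachiteChainConsumer, and the data they exchange.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};
use std::thread;

/// Hypothetical output of the consensus side (stand-in for a decided value).
#[derive(Debug)]
struct DecidedBlock {
    height: u64,
    transactions: Vec<String>,
}

/// Hypothetical form that the execution side understands.
#[derive(Debug, PartialEq)]
struct ExecutableBlock {
    number: u64,
    tx_count: usize,
}

/// Stand-in for the chain task: emits `count` decided blocks, then stops.
fn chain_task(blocks: Sender<DecidedBlock>, count: u64) {
    for height in 1..=count {
        let transactions = (0..height).map(|i| format!("tx-{}-{}", height, i)).collect();
        if blocks.send(DecidedBlock { height, transactions }).is_err() {
            break; // the consumer went away
        }
    }
    // Dropping `blocks` here closes the channel and lets the consumer finish.
}

/// Stand-in for the consumer task: marshals decided blocks into executable ones.
fn consumer_task(blocks: Receiver<DecidedBlock>) -> Vec<ExecutableBlock> {
    blocks
        .iter()
        .map(|b| ExecutableBlock { number: b.height, tx_count: b.transactions.len() })
        .collect()
}

/// Run both tasks inside the same process and return what the consumer produced.
fn demo_tasks() -> Vec<ExecutableBlock> {
    let (tx, rx) = channel();
    let producer = thread::spawn(move || chain_task(tx, 3));
    let consumer = thread::spawn(move || consumer_task(rx));
    producer.join().expect("chain task panicked");
    consumer.join().expect("consumer task panicked")
}

fn main() {
    for block in demo_tasks() {
        println!("executing {:?}", block);
    }
}
```

A setup closer to real conditions would replace the in-process channel with a boundary between two processes, which is exactly the part this shortcut avoids.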

iii. Future: Multi-proposer Tendermint abstraction

The multi-proposer design would enable the input of multiple peers to define the content of each block. In the vanilla Tendermint design, each block has a single proposer, who entirely controls the content of that block.
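The difference between the two designs can be pictured with a toy example. It is purely illustrative and does not reflect any existing or planned implementation: both functions are hypothetical, proposer selection is simplified to plain round-robin by height (an assumption), and a deduplicated union is just one of many possible ways to combine inputs.

```rust
use std::collections::BTreeSet;

/// Single proposer: the block holds only what the selected proposer submitted.
/// Proposer selection is simplified to round-robin by height (assumption).
fn single_proposer_block(inputs: &[Vec<String>], height: usize) -> Vec<String> {
    if inputs.is_empty() {
        return Vec::new();
    }
    inputs[height % inputs.len()].clone()
}

/// Multi-proposer: the block is the deduplicated, sorted union of every peer's input.
fn multi_proposer_block(inputs: &[Vec<String>]) -> Vec<String> {
    let union: BTreeSet<&String> = inputs.iter().flatten().collect();
    union.into_iter().cloned().collect()
}

/// Three peers, each with its own view of pending transactions.
fn sample_inputs() -> Vec<Vec<String>> {
    vec![
        vec!["tx-a".to_string(), "tx-b".to_string()],
        vec!["tx-b".to_string(), "tx-c".to_string()],
        vec!["tx-d".to_string()],
    ]
}

fn main() {
    let inputs = sample_inputs();
    println!("single proposer: {:?}", single_proposer_block(&inputs, 1));
    println!("multi proposer:  {:?}", multi_proposer_block(&inputs));
}
```

With a single proposer at height 1, only the second peer's transactions make it into the block, while the multi-proposer variant includes all four distinct transactions.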

One of the earliest examples of a multi-proposer approach is Multiplicity from the Duality team. Later on I dabbled with the idea in the CometBFT codebase, but it led me nowhere because the code was too complicated to modify in a reproducible way. Vote extensions, a Tendermint feature released in CometBFT v0.38, allow application builders to get one step closer to a multi-proposer design, but the APIs are not there yet.

I started toying with the idea of what a multi-proposer design would look like using the simulator. At first glance, the proof of concept will entail several modifications to the simulator, which I'll describe once I'm done. Please reach out if you are interested in collaborating or staying in the loop on this effort!

Learn more

For a more comprehensive introduction to malachite-simulator please look at the open-source code and in particular the main readme.md. The code for the reth integration proof of concept is in the repo rem-poc.

References & Links

- I mentioned Malachite in the past in the context of product strategy @Snowboards and Sequencers.

- For a more in-depth discussion of the rationale behind Malachite, I recommend reading this blog from Informal.

- For an intro on state machine replication, see: https://decentralizedthoughts.github.io/2019-10-15-consensus-for-state-machine-replication/
